Biography

I am a Postdoctoral Researcher at ETH Zurich working with Prof. Dr. Luca Benini, where I lead the machine learning research direction within the group. My work focuses on making AI accessible on the most resource-constrained devices, enabling foundation models and advanced ML systems to run on wearable biomedical devices that consume only microwatts of power.

I develop efficient neural architectures that bridge the gap between large-scale foundation models and ultra-low-power edge deployment. My recent work on LUNA (NeurIPS 2025) reduces FLOPs by 300× while maintaining state-of-the-art performance for EEG analysis. I’m particularly interested in tiny recursive models, deep supervision techniques for time-series signals, and scalable foundation models for biosignals.

I’m looking for students and collaborators to work with me at the intersection of TinyML and biomedical AI. If you’re passionate about making AI work on edge devices, I’d love to hear from you.

- Foundation Models for Biosignals

- Tiny Recursive Models & Deep Supervision

- TinyML & Edge AI Deployment

Education

- Ph.D. in Electrical Engineering and Information Technology, 2025, ETH Zurich

- M.Sc. in Electrical Engineering and Information Technology, 2020, ETH Zurich

- B.Sc. in Electrical and Computer Engineering, 2018, University of Iceland

What I’m Currently Working On

🧠 Foundation Models for Biosignals

I’m developing large-scale pre-trained models that can understand diverse biomedical signals with minimal fine-tuning. Our LUNA model (NeurIPS 2025) performs topology-agnostic EEG analysis with 300× fewer FLOPs and 10× less memory than standard transformer approaches, enabling more robust and generalizable health monitoring systems.

Recent work: LUNA at NeurIPS 2025 | Code on GitHub
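
Why does compressing channels save so much compute? Below is a back-of-envelope sketch under loudly simplified assumptions: the baseline treats every (channel, patch) pair as a token and runs full self-attention over all of them, while the compressed model first lets Q learned queries cross-attend over the C channels of each patch and then self-attends over the Q·P latent tokens. Only the attention matmuls are counted (projections, MLPs and softmax are ignored), and all sizes are hypothetical, not LUNA's configuration or the setup behind the reported 300× figure.

```python
def attention_flops(num_queries: int, num_keys: int, dim: int) -> int:
    """FLOPs of the two attention matmuls (Q K^T and A V), 2 FLOPs per multiply-add.

    Projections, MLPs and softmax are deliberately ignored in this rough estimate.
    """
    return 2 * 2 * num_queries * num_keys * dim


def baseline_flops(channels: int, patches: int, dim: int) -> int:
    # Assumption: one token per (channel, patch) pair, full self-attention over all of them.
    tokens = channels * patches
    return attention_flops(tokens, tokens, dim)


def compressed_flops(channels: int, patches: int, queries: int, dim: int) -> int:
    # Assumption: per patch, `queries` learned queries cross-attend over the C channel tokens...
    compress = patches * attention_flops(queries, channels, dim)
    # ...then self-attention runs over queries * patches latent tokens, independent of C.
    latent = queries * patches
    return compress + attention_flops(latent, latent, dim)


if __name__ == "__main__":
    # Hypothetical sizes: 64 patches, 64-dim embeddings, 4 learned queries.
    for channels in (22, 64, 128):
        ratio = baseline_flops(channels, 64, 64) / compressed_flops(channels, 64, 4, 64)
        print(f"{channels:>3} channels: baseline / compressed = {ratio:.1f}x")
```

The ratio grows with the number of electrodes because, under these assumptions, the baseline is quadratic in C·P while the compressed path is only linear in C.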

🔄 Tiny Recursive Models

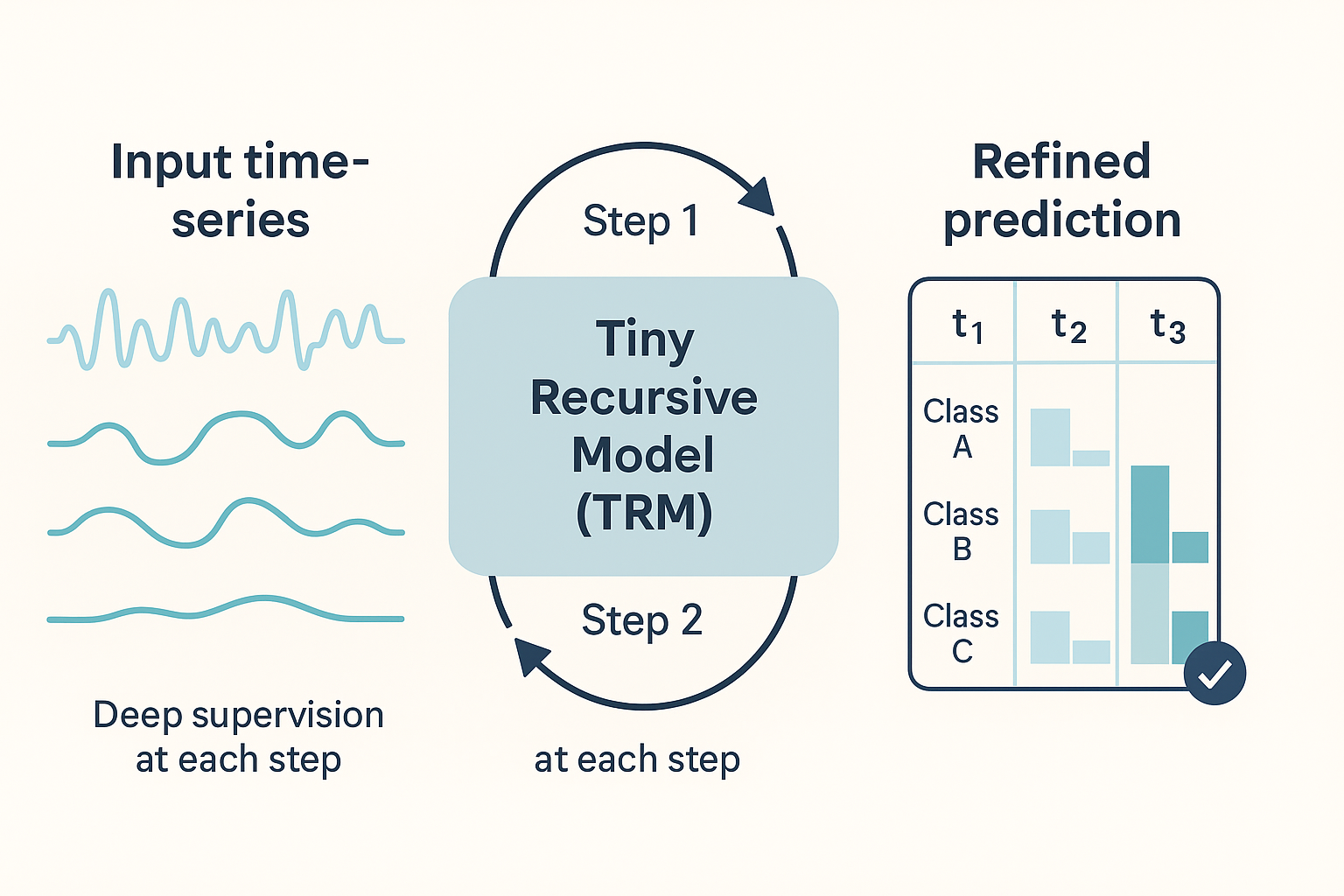

I’m investigating how deep recursion and deep supervision can be applied to time-series biosignals to improve both model efficiency and accuracy. Reusing a small network over several refinement steps enables more sophisticated temporal modeling while staying within the ultra-low computational budgets required for edge deployment.

Focus areas: Deep supervision, recurrent architectures, temporal feature learning
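
A minimal forward-pass sketch of the idea, assuming a hypothetical toy model: one small shared block is applied recursively to refine a latent state, a linear readout makes a prediction after every step, and deep supervision averages the loss over all steps instead of scoring only the last one. The weights are random and the input is synthetic; training would backpropagate the averaged loss with an autodiff framework such as PyTorch. This is one simple form of deep supervision, not the exact scheme of any published TRM.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_params(in_dim: int, hidden: int, classes: int) -> dict:
    # Hypothetical tiny model: one shared update block plus a linear readout head.
    return {
        "W_x": rng.normal(0.0, 0.1, (hidden, in_dim)),
        "W_z": rng.normal(0.0, 0.1, (hidden, hidden)),
        "W_o": rng.normal(0.0, 0.1, (classes, hidden)),
    }


def softmax(logits: np.ndarray) -> np.ndarray:
    e = np.exp(logits - logits.max())  # subtract max for numerical stability
    return e / e.sum()


def recursive_forward(params: dict, x: np.ndarray, steps: int) -> list:
    """Reuse the same small block `steps` times and read out a prediction after each."""
    z = np.zeros(params["W_z"].shape[0])
    predictions = []
    for _ in range(steps):
        # Refine the latent state from the input and its own previous value.
        z = np.tanh(params["W_x"] @ x + params["W_z"] @ z)
        predictions.append(softmax(params["W_o"] @ z))
    return predictions


def deep_supervision_loss(predictions: list, label: int):
    """Cross-entropy at every recursion step, averaged, instead of only at the last step."""
    per_step = [float(-np.log(p[label] + 1e-12)) for p in predictions]
    return sum(per_step) / len(per_step), per_step


if __name__ == "__main__":
    params = init_params(in_dim=16, hidden=32, classes=2)
    x = rng.normal(size=16)  # synthetic stand-in for features of one EEG window
    preds = recursive_forward(params, x, steps=6)
    total, per_step = deep_supervision_loss(preds, label=1)
    print("per-step losses:", [round(v, 3) for v in per_step])
    print("deep-supervision loss:", round(total, 3))
```

Supervising every step pushes early iterations toward useful answers too, which is what later makes it possible to stop the recursion early on easy inputs.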

🚀 Edge AI Deployment

I’m developing hardware-aware methods to deploy foundation models and advanced ML systems on resource-constrained wearable devices. This involves co-designing algorithms and implementations to achieve microwatt-level power consumption while maintaining clinical-grade performance for applications like seizure detection and physiological monitoring.

Technologies: GAP9, RISC-V processors, TinyML optimization
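
Microwatt budgets are easiest to reason about as duty-cycled averages. A worked sketch in which every number is hypothetical (not a measured GAP9 or model figure): average power is the inference energy spent per second plus the sleep power for the remaining idle fraction of each second.

```python
def average_power_uw(energy_per_inference_uj: float, inferences_per_s: float,
                     active_time_s: float, sleep_power_uw: float) -> float:
    """Average power of a duty-cycled wearable, in microwatts.

    uJ per inference times inferences per second gives uJ/s, i.e. uW; the sleep
    power applies only to the fraction of each second the processor is idle.
    """
    active_fraction = inferences_per_s * active_time_s
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("inference schedule does not fit in real time")
    inference_uw = energy_per_inference_uj * inferences_per_s
    return inference_uw + sleep_power_uw * (1.0 - active_fraction)


def battery_life_days(battery_mwh: float, average_uw: float) -> float:
    # 1 mWh = 1000 uWh; dividing by average power in uW gives hours.
    return battery_mwh * 1000.0 / average_uw / 24.0


if __name__ == "__main__":
    # Hypothetical: 100 uJ per inference, one 2 s window every 2 s, 10 ms active, 5 uW sleep.
    power = average_power_uw(100.0, 0.5, 0.01, 5.0)
    print(f"average power: {power:.1f} uW")  # about 55 uW
    print(f"battery life on a hypothetical 100 mWh cell: {battery_life_days(100.0, power):.0f} days")
```

The dominant term here is the inference energy, which is why cutting FLOPs and memory traffic per inference matters more than shaving sleep power.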

Flagship Foundation Models

Scaling EEG intelligence from topology-agnostic transformers to linear-time state-space architectures

LUNA · NeurIPS 2025

Topology-agnostic EEG foundation model, pretrained on 21k+ hours of recordings, that achieves 0.921 AUROC on TUAR with 300× fewer FLOPs and 10× lower memory use.

FEMBA · EMBC 2025

Bidirectional Mamba EEG foundation model that scales linearly with sequence length and reaches 0.949 AUROC on TUAR.
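
The linear scaling comes from replacing pairwise attention with a recurrence that touches each time step once. A deliberately simplified sketch, assuming a diagonal linear state-space model with fixed parameters: Mamba's selective scan makes the parameters input-dependent and uses a hardware-aware parallel scan, and summing the forward and backward passes is an illustrative choice, not necessarily how FEMBA merges the two directions.

```python
import numpy as np


def linear_scan(x, a, b, c):
    """Diagonal linear state-space recurrence over a 1-D sequence.

    h_t = a * h_{t-1} + b * x_t   (element-wise, state size N)
    y_t = c . h_t
    Each step does O(N) work, so the whole pass is O(L * N) for length L.
    """
    h = np.zeros_like(a, dtype=float)
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = a * h + b * x_t
        y[t] = c @ h
    return y


def bidirectional_scan(x, fwd_params, bwd_params):
    """Run one scan left-to-right and another right-to-left, then sum them."""
    forward = linear_scan(x, *fwd_params)
    backward = linear_scan(x[::-1], *bwd_params)[::-1]
    return forward + backward


if __name__ == "__main__":
    # Impulse input and a single decaying state make the behaviour easy to read.
    x = np.array([1.0, 0.0, 0.0, 0.0])
    params = (np.array([0.5]), np.array([1.0]), np.array([1.0]))
    print(bidirectional_scan(x, params, params))
```

The forward pass spreads the impulse to later steps (1, 0.5, 0.25, 0.125) while the backward pass lets earlier steps see it too, so the first position receives 2.0 in total.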

Featured Work

🏆 LUNA: Foundation Model for EEG Analysis

Accepted at NeurIPS 2025 | 📄 Read Paper

LUNA is an efficient, topology-agnostic foundation model for EEG signal analysis that reconciles disparate electrode configurations while sharply reducing computational cost.

Key Achievements:

- 🎯 300× reduction in FLOPs compared to standard transformers

- 💾 10× less GPU memory usage

- 🏅 State-of-the-art performance: 0.921 AUROC on the TUAR benchmark

- 📊 Pretrained on 21,000+ hours of diverse EEG data

- 🌍 Topology-agnostic: Works across different electrode layouts

The model uses learned queries and cross-attention mechanisms to compress multi-channel EEG into a unified latent representation, enabling practical deployment of foundation models for biosignals.
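
A minimal NumPy sketch of learned-query cross-attention for a single time patch, assuming random placeholder weights and omitting multi-head attention, electrode-location embeddings, and output projections (all of which a real model would likely need). It shows the key property: Q learned queries attend over however many channel tokens exist and always return a Q × d latent, so recordings with different electrode counts map to the same shape.

```python
import numpy as np


def softmax(scores, axis=-1):
    scores = scores - scores.max(axis=axis, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=axis, keepdims=True)


def compress_channels(channel_tokens, queries, w_k, w_v):
    """Cross-attend learned queries over the channel tokens of one time patch.

    channel_tokens: (C, d) one embedding per electrode; C may differ across montages
    queries:        (Q, d) learned vectors shared by every montage
    returns:        (Q, d) fixed-size latent, independent of C
    """
    keys = channel_tokens @ w_k
    values = channel_tokens @ w_v
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])  # (Q, C)
    return softmax(scores, axis=-1) @ values


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, num_queries = 32, 4
    queries = rng.normal(size=(num_queries, d))  # would be trained in practice
    w_k, w_v = rng.normal(size=(d, d)), rng.normal(size=(d, d))
    for num_channels in (19, 64):  # two hypothetical electrode layouts
        tokens = rng.normal(size=(num_channels, d))
        print(num_channels, "channels ->", compress_channels(tokens, queries, w_k, w_v).shape)
```

Because the attention weights are normalized over channels, shuffling the channel order leaves the output unchanged; distinguishing electrodes therefore has to come from information inside the tokens, such as a position embedding.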

🔗 Resources

Code repository features:

- PyTorch Lightning implementation

- Hydra configuration system

- Pre-trained model weights available

- FEMBA & LUNA architectures

- Distributed training support

Supported downstream tasks:

- Abnormality detection

- Artifact rejection

- Slowing classification

- Emotion recognition

Available Projects for Students

🎓 Looking for Students & Collaborators

I'm looking for motivated students to work with me on TinyML and biomedical AI research. If you're passionate about making AI work on edge devices and have a strong background in machine learning, I'd love to hear from you.

Why work with me?

- 🔬 Publish at top-tier venues (NeurIPS, ICML, IEEE journals)

- 🛠️ Access to cutting-edge hardware (GAP9, embedded ML platforms)

- 🌍 Collaborate with leading research groups and industry partners

- 🎯 Work on real-world applications with practical impact

- 📚 Regular mentorship and career guidance

📋 Open MSc Thesis Topics

Tiny Recursive Models for Time-Series

Adapt TRMs to non-visual domains (e.g., the UCR time-series archive, EEG) and analyze how deep supervision and adaptive halting affect accuracy and compute.
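
One way to frame adaptive halting at inference, sketched under assumptions: a hypothetical `step` function refines the current answer, a hypothetical `halt_prob` head scores its confidence, and recursion stops as soon as that score crosses a threshold or the step budget runs out. Easy inputs then exit early and save compute; the toy functions in the usage example stand in for trained networks.

```python
from typing import Callable, Tuple, TypeVar

S = TypeVar("S")


def run_with_halting(step: Callable[[S], S], halt_prob: Callable[[S], float],
                     state: S, max_steps: int, threshold: float = 0.5) -> Tuple[S, int]:
    """Apply `step` recursively until `halt_prob(state) >= threshold` or `max_steps` is hit.

    Returns the final state and how many recursion steps were actually used.
    """
    if max_steps < 1:
        raise ValueError("max_steps must be at least 1")
    for steps_used in range(1, max_steps + 1):
        state = step(state)
        if halt_prob(state) >= threshold:
            break
    return state, steps_used


def toy_refine(estimate: float) -> float:
    # Hypothetical stand-in for a trained block: halve the distance to the target 1.0.
    return estimate + 0.5 * (1.0 - estimate)


def toy_confidence(estimate: float) -> float:
    # Hypothetical stand-in for a halting head: closer to the target means more confident.
    return 1.0 - abs(1.0 - estimate)


if __name__ == "__main__":
    estimate, used = run_with_halting(toy_refine, toy_confidence, 0.0, max_steps=8, threshold=0.9)
    print(f"halted after {used} of 8 steps with estimate {estimate}")
```

With these toy functions the loop stops after 4 of 8 steps (estimate 0.9375), halving the recursion cost for this input; averaging `used / max_steps` over a dataset gives the compute side of the accuracy trade-off.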

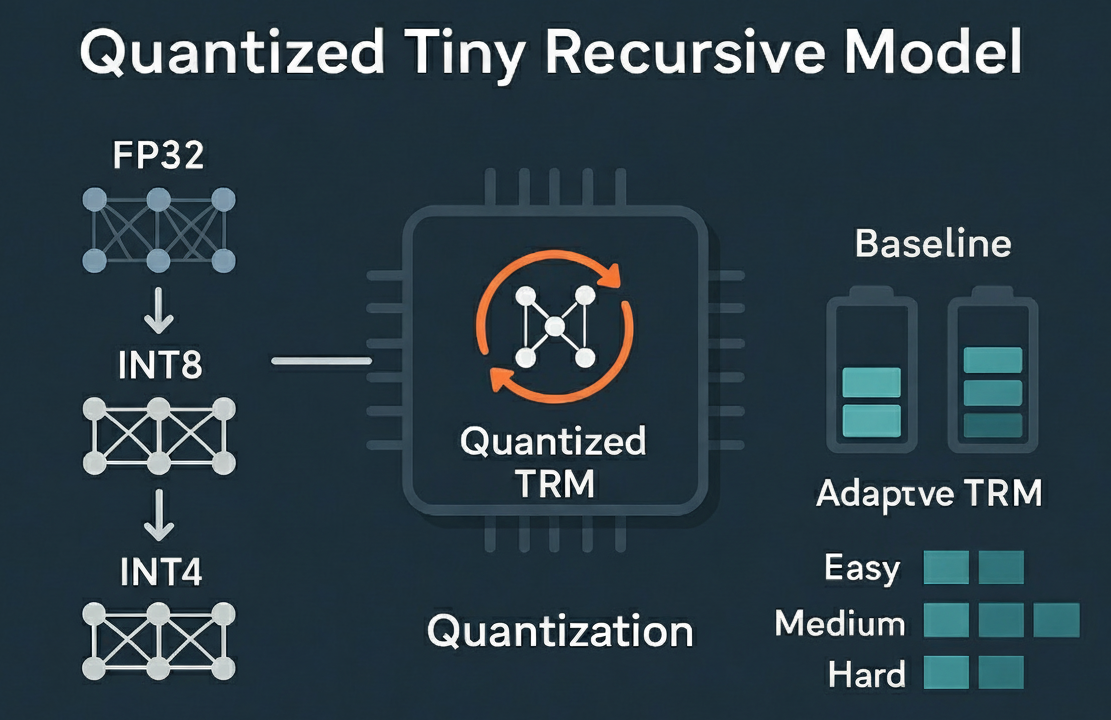

Quantized TRMs for Edge Deployment

Quantize TRMs to INT8/INT4, deploy them on GAP9 or Arm Cortex-M microcontrollers, and study accuracy–energy trade-offs and on-device adaptive halting.
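
A possible starting point for the quantization part, sketched as plain per-tensor symmetric post-training quantization of one weight matrix. This is an assumption: per-channel scales or quantization-aware training may work better, and real GAP9 or Cortex-M deployment goes through a dedicated toolchain. INT4 values are kept in an int8 container here purely for simplicity.

```python
import numpy as np


def quantize_symmetric(weights: np.ndarray, num_bits: int = 8):
    """Per-tensor symmetric quantization to signed integers in [-qmax, qmax]."""
    if not 2 <= num_bits <= 8:
        raise ValueError("this sketch stores values in int8, so num_bits must be 2..8")
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8, 7 for INT4
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -qmax, qmax).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(0.0, 0.05, size=(64, 64))  # synthetic weights
    for bits in (8, 4):
        q, scale = quantize_symmetric(w, bits)
        err = np.abs(w - dequantize(q, scale)).mean()
        print(f"INT{bits}: mean abs error {err:.2e}, packed storage {bits * w.size // 8} bytes")
```

INT4 halves weight storage relative to INT8 at the cost of a coarser grid; whether that error matters has to be measured on task accuracy and on-device energy, which is what the project would study.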

💡 Not Seeing Your Dream Topic?

If you’re interested in working with me but don’t see a perfect fit above, feel free to reach out. I’m always open to discussing new ideas at the intersection of:

- Foundation models for biosignals

- Tiny recursive models and deep supervision

- Hardware-aware neural architecture search

- TinyML deployment and optimization


Recent Publications

PDF Code Dataset Open Access PDF DOI (Epilepsia) SzCORE GitHub

Contact

- thoriri@iis.ee.ethz.ch

- OAT (Andreasturm 5), Zurich, 8050

- Take the elevator to floor 16, Office U21.

- Message me on X