Reducing Neural Architecture Search Spaces with Training-Free Statistics and Computational Graph Clustering

Abstract

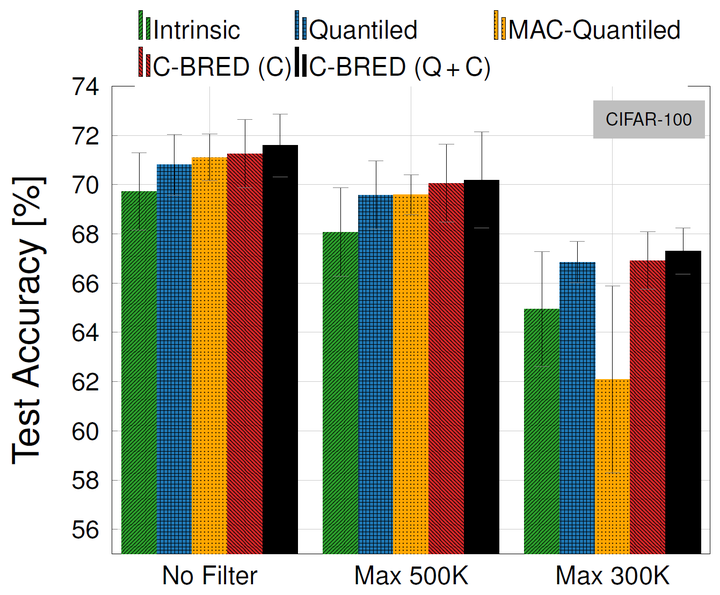

The computational cost of neural architecture search (NAS) algorithms usually grows in proportion to the size of the search space they target. Restricting the search to a high-quality subset can therefore greatly reduce that cost. In this paper, we present Clustering-Based REDuction (C-BRED), a new technique for reducing the size of NAS search spaces. C-BRED clusters the computational graphs of the architectures in a NAS space and selects the most promising cluster using proxy statistics that correlate with network accuracy. On the NAS-Bench-201 (NB201) data set and the CIFAR-100 task, C-BRED selects a subset with an average accuracy of 70%, compared with 64% for the whole space.
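The cluster-then-select idea can be illustrated with a minimal sketch. It is not the paper's implementation. It assumes each architecture has already been encoded as a fixed-length feature vector (here, hypothetical per-operation counts) and that a scalar training-free proxy score is available for each one. Plain k-means stands in for whatever graph clustering method is actually used, and the names `kmeans`, `select_cluster`, `features`, and `proxy_scores` are illustrative only.

```python
from statistics import mean


def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means; returns one cluster label per point."""
    # Assumption: deterministic initialization from the first k points.
    centers = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each point to its nearest center (squared Euclidean distance).
        labels = [
            min(
                range(k),
                key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centers[c])),
            )
            for p in points
        ]
        # Move each center to the mean of its members; keep it if the cluster is empty.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [mean(dim) for dim in zip(*members)]
    return labels


def select_cluster(features, proxy_scores, k=2):
    """Cluster the architectures, then keep the cluster with the best mean proxy score."""
    labels = kmeans(features, k)
    cluster_means = {
        c: mean(s for s, lab in zip(proxy_scores, labels) if lab == c)
        for c in set(labels)
    }
    best = max(cluster_means, key=cluster_means.get)
    return [i for i, lab in enumerate(labels) if lab == best]


# Toy search space (made-up values): counts of [conv3x3, skip, zeroize] per architecture.
features = [[5, 1, 0], [4, 2, 0], [5, 0, 1], [0, 1, 5], [1, 0, 5], [0, 2, 4]]
# Hypothetical training-free proxy scores, one per architecture.
proxy_scores = [0.9, 0.8, 0.85, 0.2, 0.1, 0.3]

selected = select_cluster(features, proxy_scores, k=2)
print(selected)  # indices of the architectures kept in the reduced space
```

A NAS algorithm would then search only over the architectures indexed by `selected`. The quality of the reduced space depends on how well the proxy scores track accuracy, which is why the selection step relies on statistics correlated with network accuracy.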