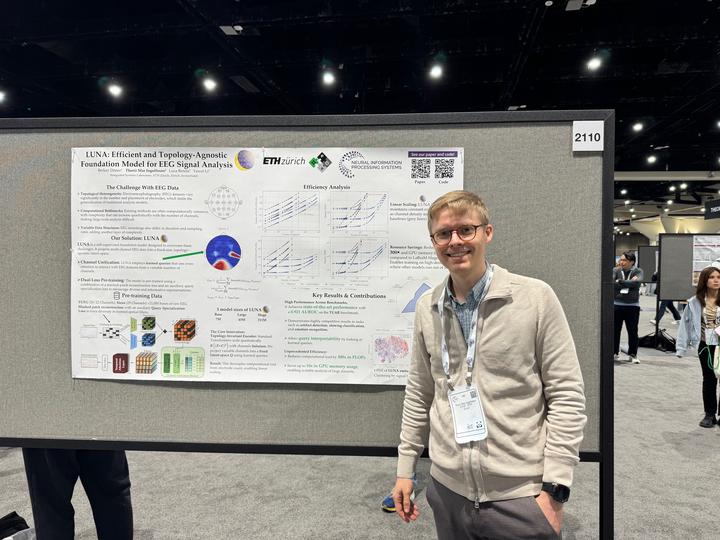

NeurIPS 2025 – LUNA Poster Presentation

Presenting the LUNA poster at NeurIPS 2025

Abstract

We presented our poster on LUNA (Latent Unified Network Architecture), a self-supervised foundation model that reconciles disparate EEG electrode geometries while scaling linearly with channel count. LUNA compresses multi-channel EEG into a fixed-size, topology-agnostic latent space via learned queries and cross-attention. It then operates exclusively on this latent representation using patch-wise temporal self-attention, which decouples the cost of temporal modeling from the electrode count. Pre-trained on TUEG and Siena (over 21,000 hours of raw EEG across diverse montages) with a masked-patch reconstruction objective, LUNA transfers effectively to four downstream tasks: abnormality detection, artifact rejection, slowing classification, and emotion recognition. It achieves state-of-the-art results on TUAR and TUSL (e.g., 0.921 AUROC on TUAR) while reducing FLOPs by 300× and GPU memory by up to 10× relative to comparable models.
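The compress-then-attend pipeline described above can be sketched in NumPy. This is a minimal, single-head illustration under stated assumptions, not LUNA's implementation. It omits the patch embedding, query/key/value projections, positional information, multiple layers, and the masked-patch reconstruction head. The function names and dimensions are hypothetical, as is the choice to flatten each patch's query outputs into one temporal token.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax along `axis`.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q: np.ndarray, k: np.ndarray, v: np.ndarray) -> np.ndarray:
    # Single-head scaled dot-product attention; leading axes act as batch axes.
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def encode(x: np.ndarray, queries: np.ndarray) -> np.ndarray:
    """Map (channels, patches, d) patch embeddings to a (patches, num_queries * d) latent.

    `queries` (hypothetical learned queries) has shape (num_queries, d).
    The output shape never depends on `channels`.
    """
    patches = x.shape[1]
    # Cross-attention: for every patch, the learned queries attend over all channel tokens.
    tokens = np.transpose(x, (1, 0, 2))                # (patches, channels, d)
    latent = attention(queries[None], tokens, tokens)  # (patches, num_queries, d)
    # Assumption: flatten each patch's query outputs into a single temporal token.
    seq = latent.reshape(patches, -1)                  # (patches, num_queries * d)
    # Patch-wise temporal self-attention with a residual connection; no channel axis remains.
    return seq + attention(seq, seq, seq)


rng = np.random.default_rng(0)
queries = rng.standard_normal((4, 16))  # 4 hypothetical queries of width 16
for channels in (19, 22, 64):           # e.g. three different montages
    x = rng.standard_normal((channels, 10, 16))
    print(channels, encode(x, queries).shape)  # each line ends with (10, 64)
```

This plain cross-attention has no notion of electrode identity, so permuting the channels leaves its output unchanged. A real model needs some way to tell electrodes apart, which this sketch deliberately leaves out.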

Poster highlights

- Topology-agnostic design: LUNA uses learned queries and cross-attention to compress any electrode layout into a fixed-size latent space, enabling training across heterogeneous EEG datasets without channel-specific engineering.

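- Linear scaling: Patch-wise temporal self-attention operates entirely in the latent space, so its cost does not depend on electrode count; only the channel-compressing cross-attention grows with the number of electrodes, and it grows linearly. Relative to comparable models, LUNA uses 300× fewer FLOPs and up to 10× less GPU memory.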

- Strong transfer: Pre-trained on over 21,000 hours of TUEG and Siena EEG, LUNA transfers to abnormality detection, artifact rejection, slowing classification, and emotion recognition, and achieves state-of-the-art results on TUAR (0.921 AUROC) and TUSL.
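The following sketch illustrates why the latent design scales better. It counts the dominant attention multiply-accumulates for two setups. The first is a hypothetical baseline that runs full self-attention over every (channel, patch) token. The second is a latent pipeline like the one described above. The baseline, the cost formula (which ignores projections, softmax, and MLPs), and all sizes are assumptions for illustration. It does not reproduce the reported 300× FLOP reduction.

```python
def attn_macs(n_q: int, n_k: int, d: int) -> int:
    # Multiply-accumulates for Q @ K^T plus the weighted sum over V
    # (projections, softmax and MLP layers are ignored).
    return 2 * n_q * n_k * d


def full_attention_cost(channels: int, patches: int, d: int) -> int:
    # Hypothetical baseline: one token per (channel, patch) pair, all attending to all.
    tokens = channels * patches
    return attn_macs(tokens, tokens, d)


def latent_cost(channels: int, patches: int, d: int, num_queries: int) -> int:
    # Cross-attention: per patch, num_queries queries attend over the channel tokens.
    cross = patches * attn_macs(num_queries, channels, d)
    # Temporal self-attention over patches; each token has width num_queries * d.
    temporal = attn_macs(patches, patches, num_queries * d)
    return cross + temporal


for channels in (22, 44, 88):
    print(channels,
          full_attention_cost(channels, patches=40, d=64),
          latent_cost(channels, patches=40, d=64, num_queries=4))
```

Doubling the channel count quadruples the baseline's cost. The latent pipeline grows only through its cross-attention term, which is linear in channels, while its temporal term stays fixed.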
